How do we learn to use words?

The importance of emotion for communication

Augustine of Hippo lived over 1600 years ago, but his memories of language learning probably still resonate with many of us:

> When my elders named some object, and accordingly moved towards something, I saw this and I grasped that the thing was called by the sound they uttered when they meant to point it out…. Thus, as I heard words repeatedly used in their proper places in various sentences, I gradually learned to understand what objects they signified.1

We assume that children need only hear a word paired with an appropriate object to learn what it means, and that humans are somehow naturally equipped to use this raw information to build phrases and sentences and to create meaning. This, for example, is the well-known view of linguist Noam Chomsky, who claimed that all humans have an innate “language acquisition device” that automatically matches the speech they hear to the correct meanings.

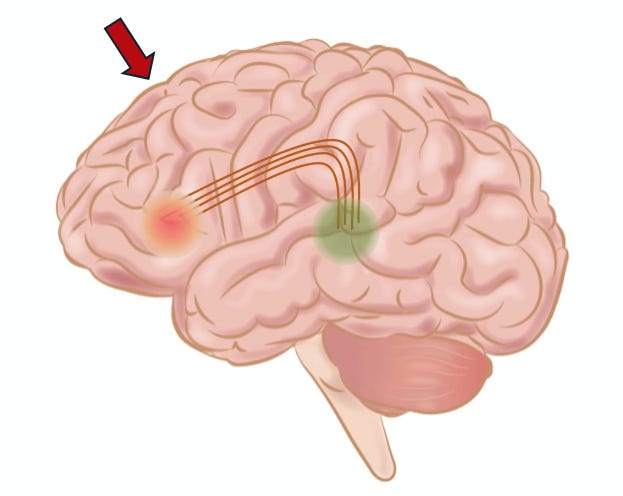

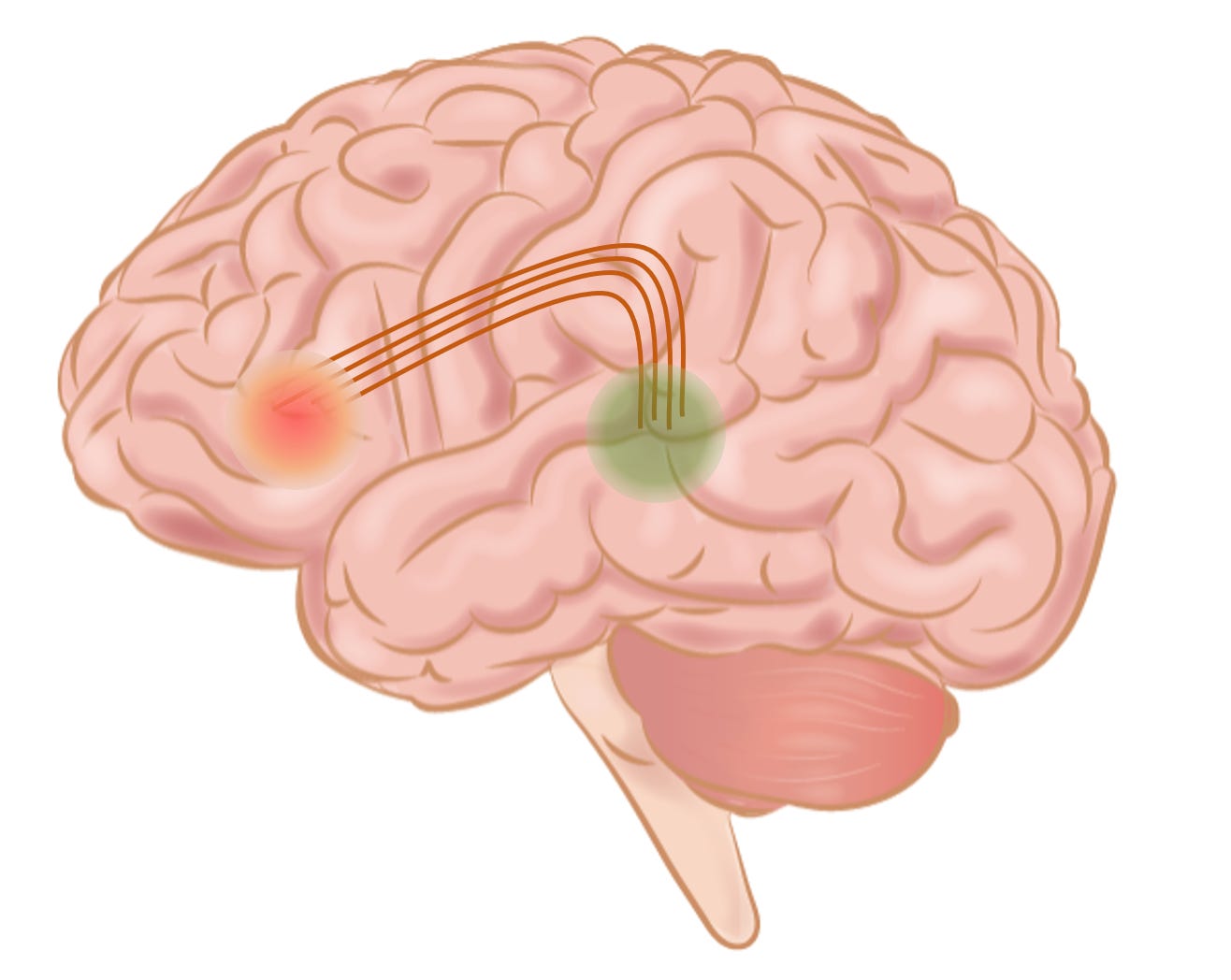

This is also largely what is taught in medical school and neuroscience courses. The classical model of language is that we comprehend speech via a region in the temporal lobe called “Wernicke’s area”, after the precocious 19th-century neurologist who discovered it at age 26. This region parses the flow of sounds into syllables and then transmits this information to “Broca’s area” in the frontal lobe, which coordinates the vocal and mouth movements necessary to form words. On this model, the network needs only to hear the appropriate input to gradually learn a language.

But this seemingly intuitive approach ignores a crucial point. How does a child, or even a linguist for that matter, know what part of an experience a particular word indicates? The philosopher Willard Van Orman Quine gave the classic example of this.2 Suppose you are a linguist studying an unfamiliar language, and you hear one of the native speakers say “gavagai!” as a rabbit runs past. You may interpret “gavagai” as meaning “rabbit”, but it might as easily mean “that’s for dinner”, “so fast!”, or even “where is it going?” For infants, the problem is compounded, as they have no first language to act as a reference.

The answer is that learning a language is not originally a logical process, but an emotional one. Children are not miniature linguists, matching words to objects the way Augustine assumes. Rather, we learn language through a remarkably emotional experience of sharing excitement and attention with others, one that involves parts of the brain quite remote from the traditional “language network”. Language is just the tip of our communicative skills.

In fact, children’s first words (or word-like sounds) arise not from overhearing adults or from explicit teaching but rather from their own exclamations of delight. Starting from just a few months after birth, children engage in a variety of “games” with adults. The earliest versions are face-to-face games like peekaboo or hand-clapping, but around eight or nine months these evolve into mutual play with objects and toys. Most of these have the same format: the child reaches for an object and tries to attract the attention of the adult, who helps the child obtain the object, and then both take turns holding and manipulating it. Seen from afar, it is almost a silent conversation “about” the object.

However, important parts of this game are far from silent. For example, how does the child initially attract the adult’s attention? Usually, this is through sound: a brief cry or shout to express delight and engage the adult. But over time, two changes occur. First, the adult will use the correct name for the object as they turn towards the child— and the child will begin to imitate and incorporate these sounds into their exclamations. Second, as the child matures, the adult will only “reward” them with attention if their exclamation is closer to the correct word than before. At nine months “cuh” might be enough, but by two or three years only “car” will suffice.

A classic example of this was described by the American psychologist Jerome Bruner in his extended study of two children in their first years of life.3 The infant Richard would initially point to anything he wanted to share with his parents with a shout of “da!” Over time, this sound evolved into different variants based on location, and Richard would specify anything above his head with a point and “boe!” Finally, this became the word “bird”, which was initially used for anything flying but gradually came to specify warm-blooded egg-laying vertebrates with wings and feathers.

Of course, at some point (usually in the second year) this process becomes much less laborious and children learn “the trick” that they can direct the adult’s attention to anything in the world with the appropriate word. But even the rapid expansion of vocabulary after this point depends upon the emotional, turn-taking games of infancy. For example, while many nouns are notoriously over-applied by children— for many months or years “dog” or “cat” mean any kind of four-legged animal— they almost never confuse “I/me” and “you”, even though both pronouns can describe the same person. The reason is that the “I” and “you” roles are more fundamental than language: for the infant, “I” exclaim to attract “your” attention, long before I know what I am exclaiming about.4

Why does this matter for the brain? A closer look at the classical “language network” suggests that something is missing. As usually described, the language network perceives words in the temporal lobe, transmits them to the lateral frontal lobe, and combines them to form new sentences. From this perspective, it is not much different from ChatGPT or computer speech processing. What is absent is the motive for communication in the first place: the adult equivalent of the child’s exclamation of delight that is eventually shaped into words. But where in the brain is the impetus to speak, if not in the language network?

In children, the brain locus for these emotional exclamations during play or mutual attention is outside the traditional language network in the medial prefrontal cortex, or mPFC.5 The mPFC receives inputs from the emotional regions of the limbic system and sends outputs to the more traditional speech areas, acting as a bridge between motivation and vocalization. In other animals, a similar region is responsible for calls during grooming or social touch (many of which, interestingly, are outside of the range of human hearing).6

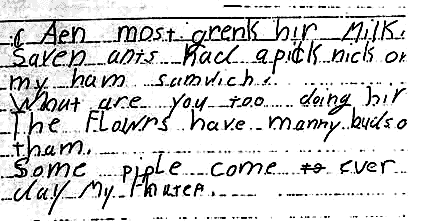

The mPFC is not just a transient bridge during language learning. It continues to play a crucial (but overlooked) role in adult speech, distinct from that of the traditional language areas. Adults with damage in the traditional regions have aphasia, or the inability to form and understand words and sentences appropriately. Speech might be halting, or words might be gibberish; in a particularly common variety, speech can be so jumbled that clinicians sometimes call it “word salad”. But no matter how incomprehensible, patients with aphasia are always trying to communicate: they gesture, laugh, make eye contact, and attempt to engage others.

In contrast, adults with injury to the mPFC do not attempt to communicate at all. Questions are at most answered in monosyllables, and only if asked forcefully. With milder lesions, patients can be cajoled into repeating a sentence (and can do it correctly), but with the largest lesions they sit impassively for hours, hardly moving and never speaking, a state called “akinetic mutism”. One patient, after partially recovering from this state, recalled that his problem was simply that “thoughts [did] not enter my head”.7

And the role of this region is apparent in healthy adults as well. The sociologist Erving Goffman famously described how adult interactions are “framed” by the same sorts of emotional cues that children use to engage with their parents.8 Throughout our conversations, we habitually make eye contact, smile, emit listening sounds like “ah” or “mm-hmm”, and modulate our tone of voice to keep the interaction flowing smoothly. All of these motivate us to say more and to explore new topics, and all require the mPFC and not the traditional language areas.9 An impassive face, or a bored glance, can abruptly halt a conversation and create a brief moment of akinetic mutism even without a brain lesion.

We thus do not grow up as Augustine’s miniature linguists, logically piecing together the meanings of words from the data provided by adults. And our language systems are not neural versions of ChatGPT, transforming verbal input into verbal output by rote. Rather, language grows out of emotion and shared delight in the world. We speak not to name, describe, and classify, but to wonder, joke, excite, and exclaim.

1. Augustine, Confessions, I.8.

2. Quine, Willard Van Orman. Word and Object. MIT Press, 1960.

3. Bruner, Jerome S. Child’s Talk: Learning to Use Words. Norton, 1983, pp. 74-6.

4. Kaye, Kenneth. The Mental and Social Life of Babies. University of Chicago Press, 1982, pp. 234-5. Kaye also raises the perceptive point that language in general would be easier without pronouns: I could just say “Matt wants a cup of tea; does Susan want one too?” rather than the trickier “I” and “you”. The fact that these words exist at all is a testament to the origins of language in reciprocal social interactions.

5. Grossmann, Tobias. "The role of medial prefrontal cortex in early social cognition." Frontiers in Human Neuroscience 7 (2013): 340.

6. Burgdorf, Jeffrey, Jaak Panksepp, and Joseph R. Moskal. "Frequency-modulated 50 kHz ultrasonic vocalizations: a tool for uncovering the molecular substrates of positive affect." Neuroscience & Biobehavioral Reviews 35.9 (2011): 1831-1836.

7. Goldberg, Gary. "Supplementary motor area structure and function: review and hypotheses." Behavioral and Brain Sciences 8.4 (1985): 567-588.

8. Goffman, Erving. Interaction Ritual: Essays in Face-to-Face Behavior. Routledge, 2017.

9. Mitchell, Rachel L. C., and Elliott D. Ross. "Attitudinal prosody: What we know and directions for future study." Neuroscience & Biobehavioral Reviews 37.3 (2013): 471-479.
